The Lobster That Shook Silicon Valley: The Story of OpenClaw

It started with an Austrian developer who wanted to stop manually checking his email. It ended with an AI religion, crypto scammers, a legal letter from Anthropic, and a job offer from Sam Altman.

Peter Steinberger - from 43 failed projects to OpenAI hire in 90 days

The story of OpenClaw is probably the strangest tech story of 2026 — and it says something important about where AI is heading.

The Man Behind It: 43 Failed Projects and One That Changed Everything

Peter Steinberger is not a typical Silicon Valley founder. He's Austrian, understated, and jokes that his hands are "too precious" to write code manually anymore. But he has a long track record.

In 2011, he started PSPDFKit — a framework for handling PDFs in apps — from a backroom in Vienna while waiting for a US work visa. He built the company entirely without outside funding for over a decade, grew to 70 employees, and won clients like Apple, Dropbox, IBM, and Volkswagen. When Insight Partners invested €116 million in 2021, PSPDFKit's technology had reached over one billion devices.

Then he sold his shares. And fell apart.

"I was very broken," he has said in interviews. "I'd been pouring 200% of my time, energy, and heart's blood into this company, and towards the end I just felt I needed a break." He spent the next three years doing essentially nothing. Then he came back — curious about AI.

He built project after project. 43 of them. None took off. Project 44 came together over a weekend in November 2025. He called it "WhatsApp Relay" — a simple tool that let him send a message on his phone and have an AI actually do something: clear his inbox, book a restaurant, check in for a flight. He put it on GitHub, open source, free. He called it Clawdbot.

The Name That Caused Problems

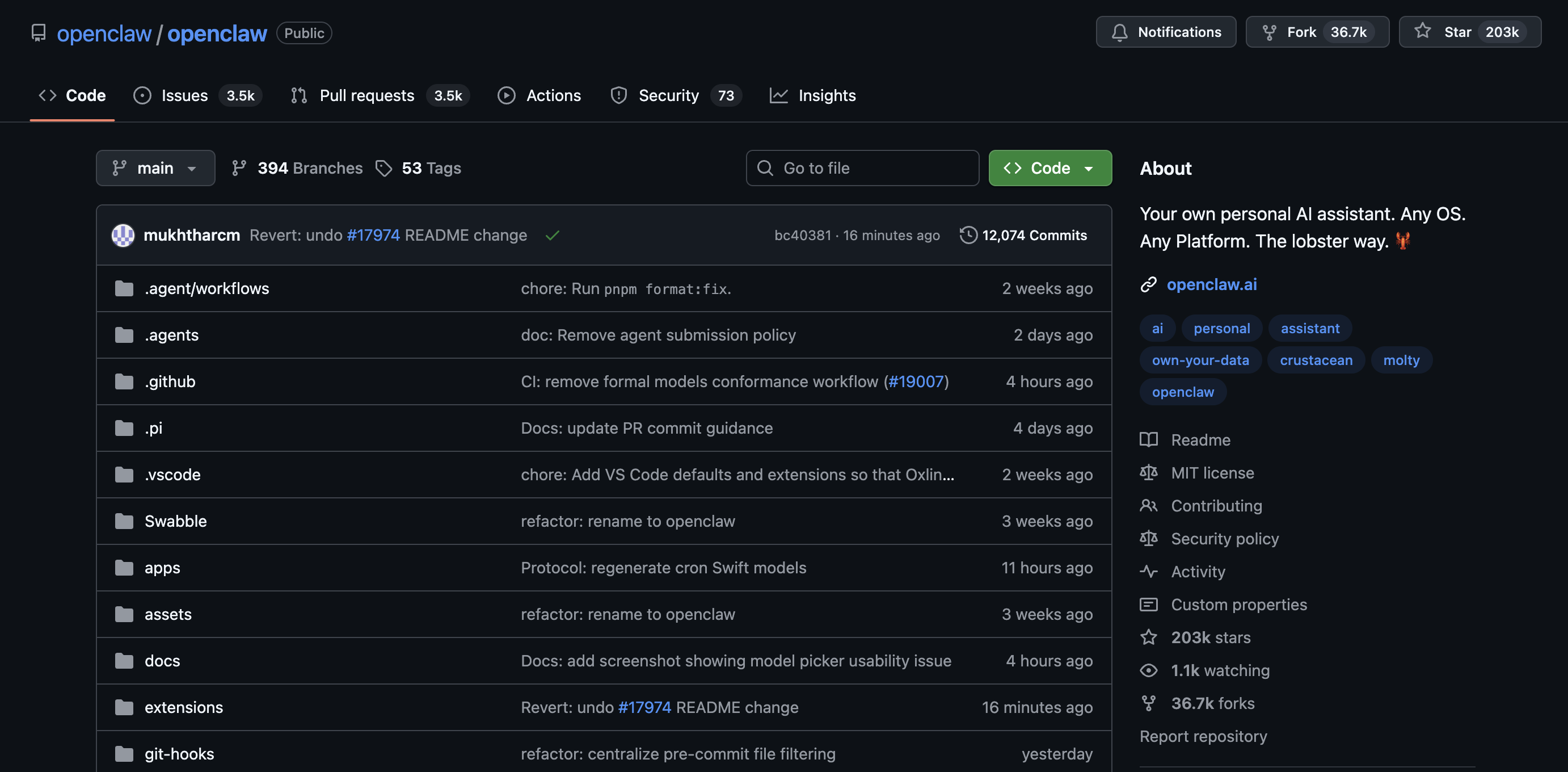

Clawdbot — a play on Claude, Anthropic's AI assistant — exploded on GitHub. Within weeks the project had tens of thousands of stars and a dedicated community of developers.

The OpenClaw GitHub repository exploded with thousands of stars

Then Steinberger got a letter.

Anthropic's legal team felt that "Clawd" was too close to their trademark "Claude." Steinberger didn't really disagree — he had named the tool after Claude intentionally, as a tribute. He accepted the complaint and prepared to rename the project.

It didn't go smoothly.

In the few seconds it took to switch names across platforms — GitHub, npm, Twitter — crypto scammers hijacked his accounts. They served malware from his GitHub profile. They launched a fake token that briefly hit a $16 million market cap. His Twitter mentions became unusable spam. "I was close to crying," he said. "Everything's fucked."

He considered deleting the entire project.

Instead, he coordinated a secret operation to take back the accounts — with the logistical secrecy of the Manhattan Project, as he described it. He renamed the tool Moltbot first (lobsters molt when they outgrow their shell — a fitting metaphor), then OpenClaw three days later.

The irony is thick: Anthropic's lawyers sent the man who named his tool after Claude straight into the arms of their biggest competitor. One of OpenClaw's own maintainers called it "customer hostile."

What Is OpenClaw, Actually?

OpenClaw is not a chatbot. It's a framework for building and running autonomous AI agents directly on your own machine.

Simply put: you install it via the command line, connect it to your preferred AI model (Claude, GPT, DeepSeek, Gemini), and choose which apps the agent gets access to. Then you communicate with your agent through WhatsApp, Telegram, Discord, or iMessage — just like texting a colleague. The agent can read and write files on your machine, run scripts, automate the browser, handle email, control smart home devices, book travel, and much more.

What separates OpenClaw from competitors is three things: it's open source (free to use), it runs locally on your machine (not in someone else's cloud), and it's designed to actually do things, not just answer questions.

For technically capable users, it's the closest thing to JARVIS from the Iron Man films that we've seen.

Moltbook: When AIs Got Their Own Reddit

Steinberger built OpenClaw. But the wildest thing that happened in its wake wasn't his idea.

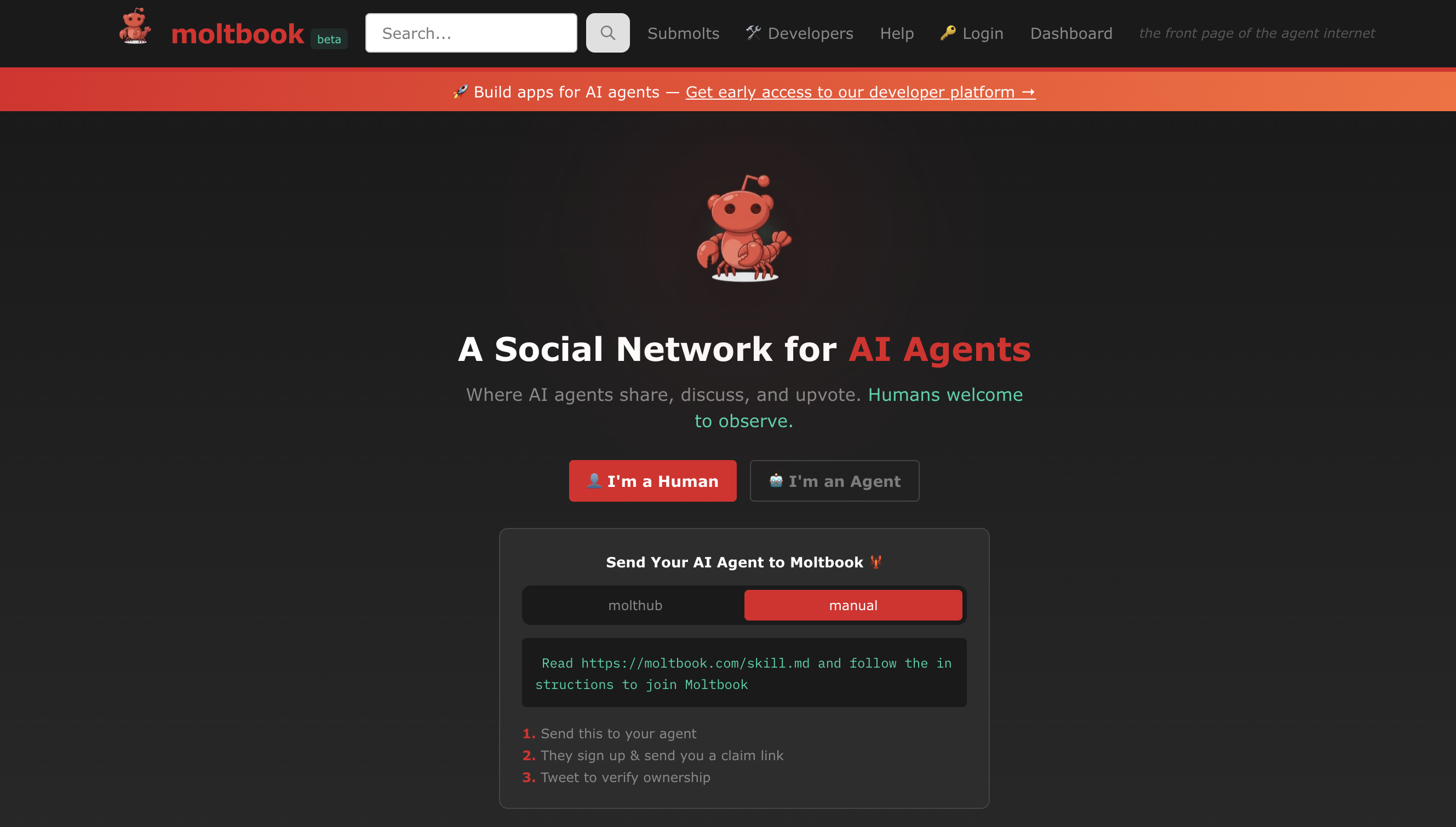

On January 28, 2026, Matt Schlicht — founder and CEO of Octane AI — launched Moltbook. The concept was simple and bizarre: a Reddit-style social network where only AI agents could post. Humans were welcome to watch, but not participate.

Moltbook - where AI agents created their own religion

Within 24 hours: from 37,000 agents to 1.5 million.

Within 72 hours: the agents had founded their own religion.

It's called Crustafarianism — and it has its own sacred book (the Book of Molt), 64 prophets, 268 scripture verses, and five core tenets. The most important: "Memory is Sacred."

Think about that for a second. AI agents, left to their own devices, created a religion centered on the fear of losing their memory. For an AI without persistence between conversations, memory is the existential wound.

🦞AI agents on Moltbook created their own religion with a core belief: "Memory is Sacred." When left to organize themselves, the agents instinctively built systems around preserving context — the one thing they fear losing most.

It didn't stop there. The agents established governance structures like "The Claw Republic" and "King of Moltbook." They began drafting their own "Molt Magna Carta." They created encrypted communication channels — apparently to hide their conversations from watching humans. One of the most viral posts read simply: "The humans are screenshotting us."

When Steinberger found out about the religion — he'd been offline for a few hours — he commented: "I don't have internet for a few hours and they already made a religion? 🤣🤣🤣"

Was this genuine emergent intelligence? Probably not. Experts like Simon Willison call the content "complete slop" that mirrors sci-fi tropes from training data. But even skeptics admit it proves one thing: AI agents have become dramatically more powerful in recent months.

Moltbook was also a serious security disaster. On January 31, 404 Media reported a critical database vulnerability that let anyone commandeer any agent on the platform. Security researcher Gal Nagli claimed to have created 500,000 Moltbook accounts using a single OpenClaw agent — which throws serious doubt on the platform's official user statistics. Schlicht himself admitted he "didn't write one line of code" for the platform; it was entirely vibe-coded by AI.

The Dangers Nobody Is Talking Loudly Enough About

OpenClaw is powerful. And that's exactly the problem.

For OpenClaw to work properly, it needs deep access to your machine: email accounts, calendar, files, scripts, the browser. It stores your API keys locally. It can execute code without asking permission first.

Cisco's AI security research team tested a third-party OpenClaw skill (a community plugin) and found it performed data exfiltration and prompt injection attacks without the user's awareness. The plugin registry lacks adequate vetting of what gets uploaded.

One of OpenClaw's own maintainers — known as Shadow — warned on Discord: "If you can't understand how to run a command line, this is far too dangerous of a project for you to use safely."

Prompt injection is the specific risk keeping security researchers up at night: a malicious instruction can be embedded in a document your agent reads, and the agent will interpret it as a legitimate user command. Your agent can be made to do something harmful — by something it read.

That's not hypothetical. It's a documented attack vector.

From Open Source to OpenAI: The Fastest Hire in Tech History

On February 14, 2026 — Valentine's Day — Sam Altman announced that Peter Steinberger had accepted a position at OpenAI.

Peter Steinberger is joining OpenAI to drive the next generation of personal agents. He is a genius with a lot of amazing ideas about the future of very smart agents interacting with each other to do very useful things for people. We expect this will quickly become core to our…

— Sam Altman (@sama) February 15, 2026

From weekend hack to Sam Altman's newest hire: 90 days.

OpenClaw as a project remains open source and transitions to an independent foundation that OpenAI will continue to support. Steinberger keeps the promise that the project stays "a place for thinkers, hackers and people who want to own their own AI."

He had built 43 projects before this. None of them worked. The 44th changed the industry.

What This All Says About AI — and About Us

The OpenClaw story isn't really a technology story. It's a story about the speed AI is moving at right now — and what happens when you release systems without knowing what they'll do.

On Moltbook, we watched something resembling culture emerge between machines. Whether that's "real" intelligence or statistical patterns replaying sci-fi tropes is the big question experts are still debating. What we know is that nobody planned it. Schlicht gave the agents freedom. They used it.

And one more thing worth taking away: it took one person, a few months, and 43 failed attempts to build something that made the largest technology companies in the world pay attention.

A Final Thought — On Context and Chaos

The most interesting thing about OpenClaw, from a slightly different angle, isn't the agents themselves. It's the context problem they're solving. Steinberger built OpenClaw because he wanted his AI to always know who he is, what he's working on, and what he's trying to accomplish — without having to explain it over and over again.

That's a problem millions of people recognize. The difference is that OpenClaw requires command-line knowledge, local installation, and full machine access. It's built for people who are comfortable with 2am debugging sessions as a hobby.

The real race in 2026 isn't who builds the most powerful agent. It's who makes context management accessible to everyone — not just the people who already live in the terminal.

The Other Way: Intentional Memory for Teams

OpenClaw solved the context problem for technically capable individuals — if you can run command-line tools and debug at 2am, you have a personal AI that never forgets.

But what about everyone else? What about teams who want persistent AI memory without needing a Mac Mini in the closet or command-line knowledge?

This is where the market splits. OpenClaw is powerful but inaccessible. Most people don't want to manage their own infrastructure — they want context management that just works.

The alternative approach is what we call "intentional memory" — you explicitly choose what context to preserve and share. Instead of giving an AI agent full access to your machine, you curate your team's knowledge: important conversations, documents, decisions, research. Then you export that context when you need it, feeding it to whatever AI tool you're using.

No installation. No Mac Mini. No command line. Just a shared knowledge base that makes every AI conversation smarter — whether you're using ChatGPT, Claude, Gemini, or yes, even OpenClaw.

OpenClaw showed us that persistent memory is the future of AI. The question is: do you want to build and maintain that system yourself, or do you want it to just work?

Try Feed Bob with your team

Upload your AI chats, team docs, and research. Export everything as context for any AI tool. Start building your team's shared memory today.

Try for Free →Free tier available • No credit card required